Fin’s WFH Story: Coding in the Age of AI

The last article was about WFH specifically, and I had some thoughts as someone who works from home as a programmer...

Coffee mug in hand. Hoodie on. Code editor glowing in the dim morning light.

That’s how most of my work-from-home days begin.

I’m a programmer, and like many others in my field, I’ve been watching AI evolve from something “interesting” to something that feels world-changing. Everyone’s talking about it, and everyone’s asking the same question:

Is programming still a safe career?

Let’s talk about that.

The Morning of a Modern Coder

Before diving into deep neural networks and token limits, I want to start with something real:

The quiet fear that many of us feel.

When ChatGPT, Gemini, Claude, and the rest showed they could write code, I’ll admit — it gave me a jolt. I wondered if I was about to be outpaced by a tool that never sleeps, never drinks too much coffee, and never gets stuck on an error message.

But after months of actually using these tools — integrating them into my workflow — I’ve realized something important: they don’t replace programmers. They amplify them.

How Large Language Models (LLMs) Actually Work

Before deciding whether AI will replace us, it helps to understand how it thinks — or more precisely, how it doesn’t.

LLMs like GPT or Claude don’t “understand” in the human sense. They don’t know what a loop or a variable means.

They work on probability: given a sequence of “tokens” (words or pieces of words), they predict the most likely next token.

Imagine teaching someone to finish your sentences by reading billions of paragraphs from the internet. They’d get good at guessing what sounds right, but that’s not the same as truly knowing what’s right.
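To make the “finish your sentences” idea concrete, here’s a toy sketch. It is not how a real LLM works internally (those use neural networks, not lookup tables); it only counts which token tends to follow which in a tiny made-up corpus, then picks the most probable one. The corpus and the function names are my own, purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def next_token_probs(counts, token):
    """Turn the raw follow-up counts for one token into probabilities."""
    followers = counts[token]
    total = sum(followers.values())
    return {tok: n / total for tok, n in followers.items()}


# Tiny made-up "training corpus", already split into tokens.
corpus = "for i in range ( n ) : for x in items : for i in range ( 10 ) :".split()

model = train_bigram(corpus)
probs = next_token_probs(model, "for")
prediction = max(probs, key=probs.get)

print(probs)       # "i" follows "for" twice, "x" once
print(prediction)  # i
```

The model “knows” that `i` usually follows `for` only because that is what the counts say. It has no idea what a loop is.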

Neural Networks in a Nutshell

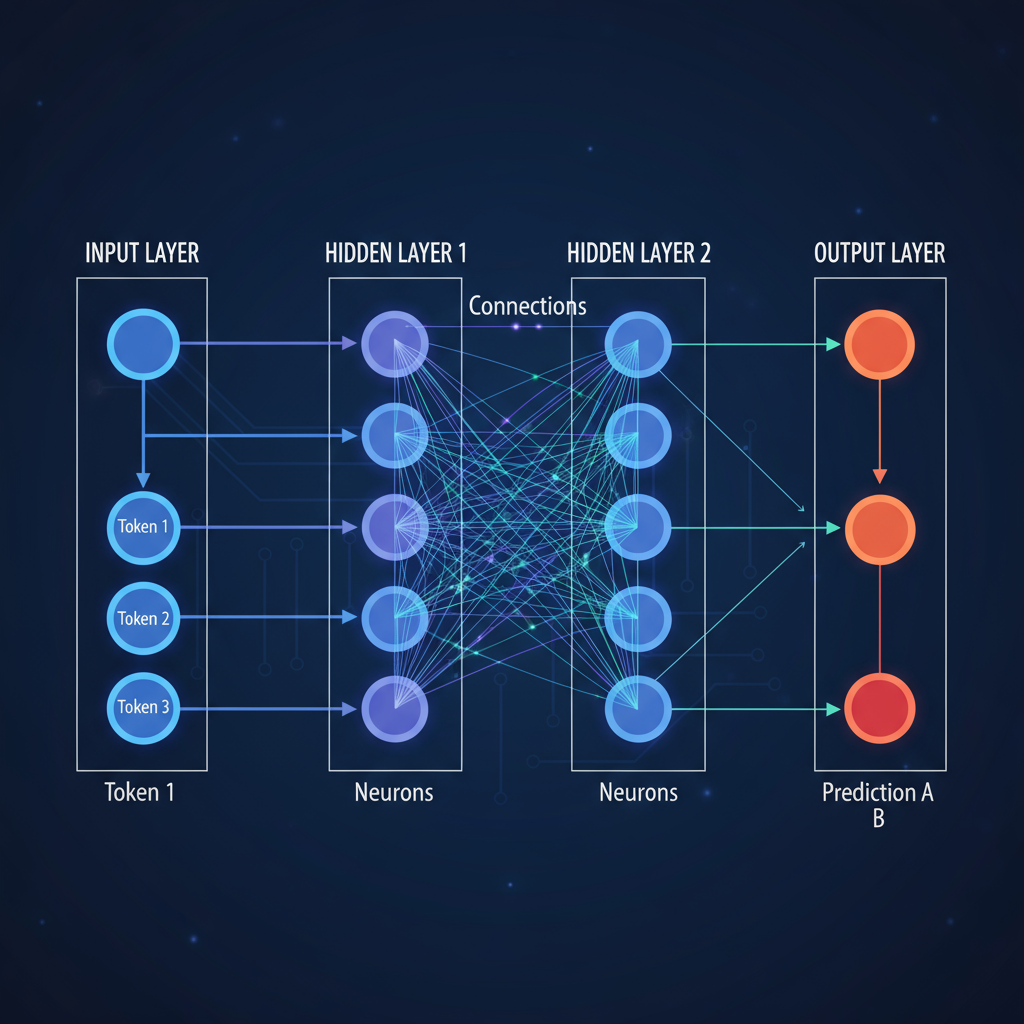

An LLM is built on something called a Transformer neural network — a structure designed to understand relationships between words in a sequence.

When you type a prompt like:

def fibonacci(n):

The model doesn’t look anything up. It uses weights learned from the huge amount of code it saw during training to calculate, statistically, what likely comes next (if n <= 1: return n).

Each neuron in the network handles a tiny numeric piece of the computation; anything resembling meaning only emerges from millions of them working together.

Attention layers help it focus on relevant words.

Embeddings map each token to a vector in a high-dimensional space, so that tokens used in similar contexts end up close together.
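If you want to see what “attention” means as plain math, here’s a minimal sketch of scaled dot-product attention in pure Python. The two-dimensional embeddings are numbers I made up, and a real Transformer would first pass them through learned query, key, and value projections, which I skip here to keep it short.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # Output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Made-up 2-d embeddings for three tokens (illustrative values only).
embeddings = {"def": [1.0, 0.0], "fibonacci": [0.0, 1.0], "n": [0.2, 0.9]}
tokens = list(embeddings)
vectors = [embeddings[t] for t in tokens]

# How much does the token "n" attend to each token in the sequence?
weights, output = attention(embeddings["n"], vectors, vectors)
for token, weight in zip(tokens, weights):
    print(f"{token:>10}: {weight:.2f}")
```

With these toy numbers, `n` puts the most weight on `fibonacci`, simply because their vectors point in similar directions. That’s the whole trick: dot products and a weighted average.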

It’s math. Beautiful math. But still math.

There’s no reasoning, no real-world understanding — only pattern recognition at staggering scale.

Context Windows: Why AI Can’t “See” Your Whole Project

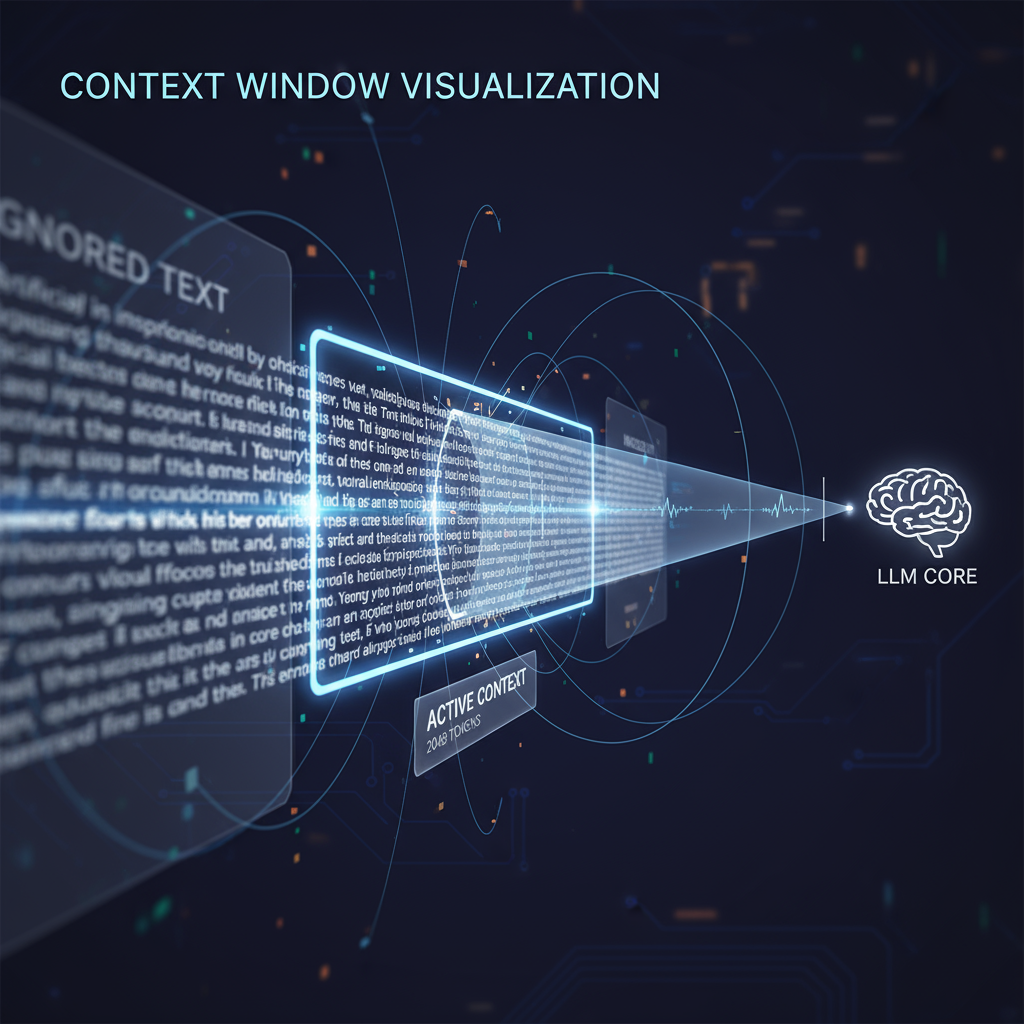

Even the most advanced models have a limit — called a context window — which caps how much information they can process at once.

A model might handle 100,000 tokens (around 75,000 words), but your company’s codebase could run to millions of tokens.

That means it can’t keep the entire system in mind. It might understand a single function, or even a full file, but it loses awareness of broader dependencies — things humans intuitively remember.

This is why AI might rename a variable correctly in one file but forget it exists in another.
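Here’s a back-of-the-envelope sketch of that mismatch. It assumes roughly four characters per token, which is only a rule of thumb (real tokenizers vary by model and by language), and the file names and sizes are invented for the example.

```python
def estimate_tokens(text, chars_per_token=4):
    """Rough token estimate, assuming about 4 characters per token."""
    return len(text) // chars_per_token


def fits_in_context(files, context_window=100_000):
    """Return the estimated total tokens and whether they fit in the window."""
    total = sum(estimate_tokens(source) for source in files.values())
    return total, total <= context_window


# Hypothetical project: file names mapped to their source text.
files = {
    "auth.py": "x" * 240_000,
    "billing.py": "y" * 200_000,
    "utils.py": "z" * 40_000,
}

total, fits = fits_in_context(files)
print(total, fits)  # 120000 False
```

Three modest files already overflow a 100,000-token window, so something has to be left out of the prompt. Whatever gets left out, the model simply never sees.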

The Compute Wall and Data Ceiling

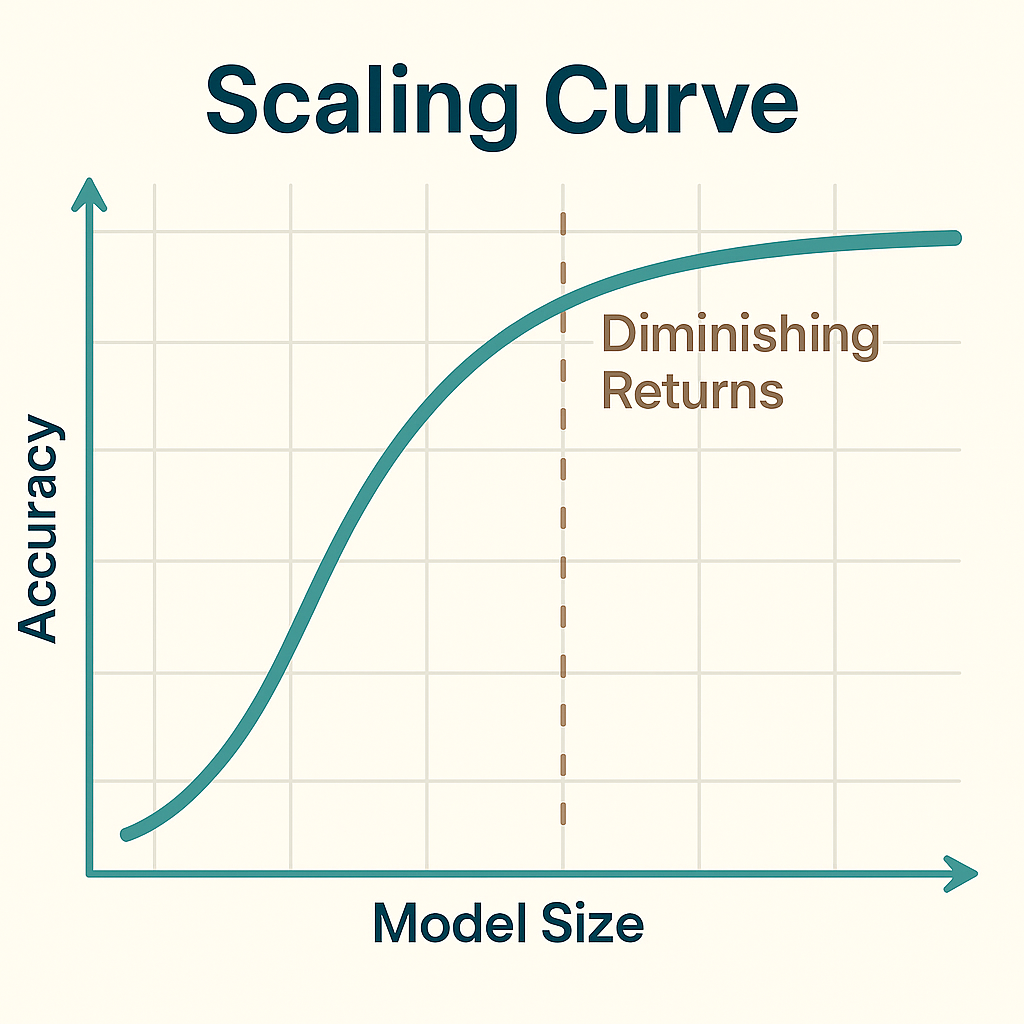

LLMs get better by training on more data and with more computing power — but both have limits.

- Compute costs: Training GPT-4-class models reportedly costs upward of $100 million.

- Data exhaustion: By some estimates, the best training data (clean, high-quality human text) is close to being used up.

- Physical scaling limits: You can only fit so many GPUs into a data center before power and heat become problems.

We’re not running out of innovation, but we are running into physics.

That’s why some researchers believe we’re approaching a plateau, and that new advances will have to come from smarter architectures, not just bigger models.

The Human Programmer’s Edge

So where does that leave us?

In a surprisingly good place.

Humans bring context. We don’t just know what to write — we know why.

We understand that a “login” system isn’t just code — it’s security, compliance, user experience, and trust.

AI doesn’t have intuition or purpose. It can’t see unintended consequences.

It’s like the difference between a GPS and an explorer. The GPS knows the roads; the explorer can build new ones.

Programming with AI: The New Workflow

Here’s what modern programming is starting to look like for me:

- I use AI for boilerplate — spinning up quick functions, regex, or documentation.

- I use GitHub Copilot for inline suggestions that speed up repetitive tasks.

- I still review, test, and rewrite — because AI code can look perfect but fail spectacularly.

- I use it as a second brain, not a replacement.
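To show what “look perfect but fail” means in practice, here’s a made-up example. The first function is the kind of tidy suggestion I might accept at a glance; it isn’t taken from any real tool, and all the names are hypothetical. A two-case test is enough to expose it.

```python
def median_suggested(values):
    """Plausible-looking suggestion: only correct for odd-length input."""
    ordered = sorted(values)
    return ordered[len(ordered) // 2]


def median_reviewed(values):
    """Version after review: handles even-length and empty input."""
    ordered = sorted(values)
    if not ordered:
        raise ValueError("median of an empty sequence is undefined")
    mid = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


def failing_cases(median):
    """Run a median function against known answers; return the failures."""
    cases = [([3, 1, 2], 2), ([4, 1, 3, 2], 2.5)]
    return [
        (data, expected, median(data))
        for data, expected in cases
        if median(data) != expected
    ]


print(failing_cases(median_suggested))  # [([4, 1, 3, 2], 2.5, 3)]
print(failing_cases(median_reviewed))   # []
```

The suggested version passes the obvious odd-length check and quietly returns the wrong answer for even-length input. That’s exactly the kind of bug that survives a quick read and shows up at 2 a.m.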

And when something breaks in production, it’s still me debugging at 2 a.m. — not the AI.

Why Many Companies Can’t Fully Adopt AI Yet

Another under-discussed reason why AI won’t take over soon: liability.

Feeding private codebases into third-party AI tools risks exposing proprietary data.

Legal frameworks for IP ownership of AI-generated code are still murky.

Many enterprise environments forbid such integrations outright.

So while small teams or solo devs experiment freely, big organizations move cautiously.

The Future: Hybrid Intelligence

We’re heading toward an era of collaborative coding — human intent, AI assistance.

The best developers of tomorrow will likely be AI-native: people who know how to prompt, structure, and guide LLMs efficiently.

Learning Python or machine learning fundamentals is still a fantastic path. It helps you understand how AI thinks, and why it fails.

You don’t need to fear AI. You just need to learn to speak its language.

Fin’s Final Thoughts

Programming from home used to mean flexibility. Now, it means resilience.

AI has changed the game, but not the goal.

It’s another evolution — like going from assembly to Python, or from punch cards to IDEs.

The craft is still alive, still growing, and still deeply human.

And while AI might one day write great code, it still needs someone — a real, breathing someone — to tell it what to build.

So keep coding, keep learning, and keep the coffee warm.

Fin’s not going anywhere.